Apple Devices Offer Amazing Speech to Text Transcription in Developer Betas, Shows Test

06/19/2025


Most current apps for transcribing audio or video to text are powered by OpenAI’s Whisper model. You’re probably using this model if you use apps like MacWhisper to transcribe meetings or lectures, or to generate subtitles for YouTube videos.

But iOS 26 and Apple’s other developer betas include the company’s own transcription frameworks – and a test suggests that they match Whisper’s accuracy while running at more than twice the speed …

If you’ve ever used the built-in dictation capabilities of any of your Apple devices, you’ve used Apple’s own Speech framework. The new betas include beta versions of two of its components, SpeechAnalyzer and SpeechTranscriber, which developers can use in their own apps.

- Use the Speech framework to recognize spoken words in recorded or live audio. The keyboard’s dictation support uses speech recognition to translate audio content into text. This framework provides a similar behavior, except that you can use it without the presence of the keyboard.

For example, you might use speech recognition to recognize verbal commands or to handle text dictation in other parts of your app. The framework provides a class, SpeechAnalyzer, and a number of modules that can be added to the analyzer to provide specific types of analysis and transcription. Many use cases only need a SpeechTranscriber module, which provides speech-to-text transcriptions.

MacStories’ John Voorhees asked his son to create a command-line tool to test this new capability, and was incredibly impressed by the results.

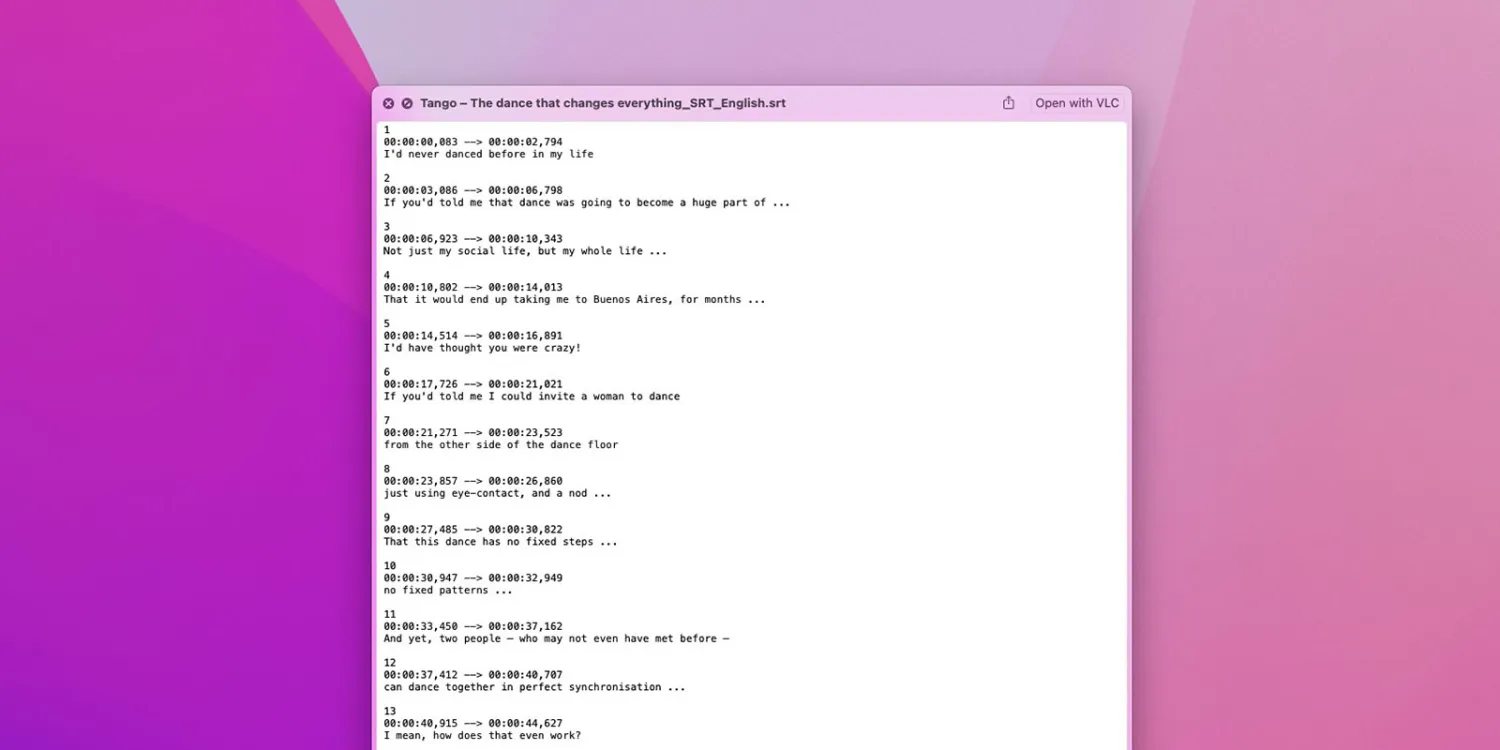

- I asked Finn what it would take to build a command line tool to transcribe video and audio files with SpeechAnalyzer and SpeechTranscriber. He figured it would only take about 10 minutes, and he wasn’t far off. In the end, it took me longer to get around to installing macOS Tahoe after WWDC than it took Finn to build Yap, a simple command line utility that takes audio and video files as input and outputs SRT- and TXT-formatted transcripts.
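For readers unfamiliar with the SRT subtitle format mentioned in the quote, here is a minimal Python sketch of how timed transcript segments can be written out as SRT. It is not Yap’s code and does not call Apple’s framework: the `srt_timestamp` and `to_srt` helpers and the sample segments are hypothetical, chosen only to illustrate the format.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time offset in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    ms_total = round(seconds * 1000)
    hours, rem = divmod(ms_total, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Turn (start_seconds, end_seconds, text) tuples into SRT text.

    Each cue is a 1-based index line, a time-range line, the cue text,
    and a blank line separating it from the next cue.
    """
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical segments, standing in for a transcriber's timed output.
    sample = [(0.0, 2.5, "Hello"), (2.5, 4.0, "World")]
    print(to_srt(sample))
```

A TXT transcript, by contrast, would simply be the segment text joined together without the index and timing lines.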

Voorhees used a 34-minute video to test it against both MacWhisper and VidCap, two of the most popular transcription apps. He found that Apple’s modules matched the accuracy of these apps, but were more than twice as fast as the most efficient existing option, MacWhisper running the Large V3 Turbo model:

| App | Transcription Time (m:ss) |
|---|---|
| Yap (using Apple’s framework) | 0:45 |
| MacWhisper (Large V3 Turbo) | 1:41 |
| VidCap | 1:55 |
| MacWhisper (Large V2) | 3:55 |

He argues that while this might seem a relatively trivial improvement for one-off tasks, the difference quickly adds up for batch transcriptions or for anyone who transcribes files regularly, like students with lecture notes.
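To put numbers on that argument, the short Python sketch below converts the reported times to seconds, compares each app against Yap, and projects a batch job. The times and the 34-minute video length come from the test above; the batch of 100 files and the assumption that time scales linearly with file count are illustrative only and were not part of the test.

```python
# Transcription times reported in the test, as "m:ss" strings.
TIMES = {
    "Yap (Apple's framework)": "0:45",
    "MacWhisper (Large V3 Turbo)": "1:41",
    "VidCap": "1:55",
    "MacWhisper (Large V2)": "3:55",
}

VIDEO_SECONDS = 34 * 60  # length of the 34-minute test video


def to_seconds(mmss: str) -> int:
    """Convert an "m:ss" string to whole seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def time_relative_to(baseline: str, times: dict) -> dict:
    """Return each app's time as a multiple of the baseline app's time."""
    base = to_seconds(times[baseline])
    return {app: to_seconds(t) / base for app, t in times.items()}


def batch_minutes(mmss: str, files: int) -> float:
    """Project total minutes for a batch.

    Assumption: every file resembles the test video and time scales linearly.
    """
    return to_seconds(mmss) * files / 60


if __name__ == "__main__":
    ratios = time_relative_to("Yap (Apple's framework)", TIMES)
    for app, t in TIMES.items():
        print(
            f"{app}: {to_seconds(t)} s, "
            f"{ratios[app]:.2f}x Yap's time, "
            f"{VIDEO_SECONDS / to_seconds(t):.1f}x faster than real time, "
            f"{batch_minutes(t, 100):.0f} min for 100 similar files"
        )
```

Under those assumptions, MacWhisper with Large V3 Turbo takes about 2.24 times as long as Yap, and a hypothetical batch of 100 similar videos would take roughly 75 minutes with Yap versus about 168 minutes with MacWhisper.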

Source: 9to5mac